When sales volumes are too low to run traditional A/B incrementality tests, the fear of wasting budget or losing momentum can paralyze any marketer; I get it. Over the years I've worked with small e-commerce brands and niche B2B products where every sale matters, and I learned to treat low-volume testing as a design challenge rather than a blocker. Below I share the practical, low-cost methods I use to estimate incrementality on Facebook and Google when traditional experiments aren't feasible.

Start with a clear definition of incrementality

Before designing any test, I make sure the team agrees on what incrementality means for our business: new purchasers, revenue net of cannibalized sales, lift in lead form submissions, or longer-term LTV uplift? Clarifying this determines which proxy metrics we can safely use when sample sizes are small. For example, if a single sale carries a high lifetime value, you may accept fewer primary events and lean on leading signals such as add-to-cart events or qualified leads.

Use proxy metrics and early signals

When conversions are rare, I rely on correlated events that occur more frequently, such as add-to-cart events and qualified leads.

These proxies are noisy, so I always run a short historical correlation check: has a lift in the proxy metric historically coincided with a lift in purchases? If so, the proxy can stand in for purchases during a limited-time experiment.
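A minimal sketch of that correlation check, assuming you can export weekly totals for the proxy and for purchases. It correlates week-over-week changes rather than raw levels, so a shared upward trend does not masquerade as a relationship. The helper names and the 0.5 threshold are hypothetical, and the numbers are made up for illustration.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric series."""
    if len(xs) != len(ys) or len(xs) < 3:
        raise ValueError("need two equal-length series with at least 3 points")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (sx * sy)

def diffs(series):
    """Week-over-week changes, which strip out a trend both series share."""
    return [b - a for a, b in zip(series, series[1:])]

def proxy_tracks_purchases(proxy, purchases, threshold=0.5):
    """Return (r, ok): correlation of weekly changes and whether it clears
    a hypothetical threshold for using the proxy as a stand-in."""
    r = pearson(diffs(proxy), diffs(purchases))
    return r, r >= threshold

# Illustrative (made-up) weekly totals for eight weeks.
add_to_carts = [40, 52, 47, 60, 55, 70, 64, 75]
purchases = [4, 5, 5, 6, 5, 7, 6, 8]
r, ok = proxy_tracks_purchases(add_to_carts, purchases)
print(f"r = {r:.2f}, usable proxy: {ok}")
```

With only a handful of weeks a single outlier can swing `r` a lot, so a longer history makes the check more trustworthy.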

Implement holdout/ghost tests at minimal cost

Full-scale holdout tests (turning ads off entirely for a control group) can be expensive. I prefer low-cost variants such as small geo or time-window holdouts.
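One way to read a small geo holdout is a ratio-adjusted pre/post comparison (a multiplicative difference-in-differences): the holdout geos' pre-to-post change projects what the test geos would have done without ads. This is a sketch under the assumption that both periods have equal length and the geos followed similar trends before launch; the function name and the numbers are hypothetical.

```python
def ratio_adjusted_lift(test_pre, test_post, ctrl_pre, ctrl_post):
    """Multiplicative difference-in-differences for a geo or time-window holdout.

    All four arguments are conversion totals over periods of equal length.
    The control's pre-to-post ratio projects a counterfactual for the test geos.
    Returns (incremental_conversions, relative_lift).
    """
    if ctrl_pre <= 0 or test_pre <= 0:
        raise ValueError("both groups need pre-period conversions")
    counterfactual = test_pre * (ctrl_post / ctrl_pre)
    incremental = test_post - counterfactual
    return incremental, incremental / counterfactual

# Hypothetical totals: during the post period ads keep running in the test geos
# and are paused in the holdout geos.
inc, lift = ratio_adjusted_lift(test_pre=40, test_post=55, ctrl_pre=38, ctrl_post=40)
print(f"incremental conversions = {inc:.1f}, relative lift = {lift:.0%}")
```

At these volumes the point estimate is noisy, so it is worth pairing it with an interval or a Bayesian probability of lift before acting on it.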

Leverage platform tools smartly

Both Facebook (Meta) and Google provide built-in experimentation tools, such as split tests, that can be adapted for low volume.

Pool data across campaigns and time

I often aggregate signals across similar campaigns, audiences, or creatives to increase statistical power. For example, if three product variants each run in a separate campaign with few conversions, pool them into a single experiment that treats them as random draws from the same distribution. Be transparent about the pooling assumptions and check homogeneity first.
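A minimal sketch of the homogeneity check, using a Pearson chi-square test of whether all campaigns share one conversion rate. The chi-square tail probability is computed with a standard recurrence so the example needs only the standard library. The function names and the 0.05 cut-off are hypothetical, and the data are illustrative.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability of the chi-square distribution, integer df >= 1.

    Uses Q(df + 2) = Q(df) + (x/2)**(df/2) * exp(-x/2) / Gamma(df/2 + 1),
    starting from Q(1) = erfc(sqrt(x/2)) or Q(2) = exp(-x/2).
    """
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        q, k = math.exp(-x / 2), 2
    else:
        q, k = math.erfc(math.sqrt(x / 2)), 1
    while k < df:
        q += (x / 2) ** (k / 2) * math.exp(-x / 2) / math.gamma(k / 2 + 1)
        k += 2
    return q

def homogeneity_test(conversions, visitors):
    """Pearson chi-square test that every campaign shares one conversion rate.

    Returns (statistic, p_value). Expected counts below roughly 5 make the
    chi-square approximation rough, which is common at these volumes.
    """
    pooled = sum(conversions) / sum(visitors)
    if not 0 < pooled < 1:
        raise ValueError("pooled rate must be strictly between 0 and 1")
    stat = 0.0
    for c, v in zip(conversions, visitors):
        exp_c, exp_n = v * pooled, v * (1 - pooled)
        stat += (c - exp_c) ** 2 / exp_c + ((v - c) - exp_n) ** 2 / exp_n
    return stat, chi2_sf(stat, len(conversions) - 1)

# Illustrative data: three product-variant campaigns (conversions, clicks).
conversions, clicks = [6, 9, 7], [1200, 1500, 1300]
stat, p = homogeneity_test(conversions, clicks)
if p > 0.05:  # hypothetical cut-off; failing to reject is not proof of homogeneity
    print(f"p = {p:.2f}: pool them, rate = {sum(conversions) / sum(clicks):.4f}")
else:
    print(f"p = {p:.3f}: rates differ; keep campaigns separate")
```

Small samples have little power to detect heterogeneity, so a high p-value should be read alongside business judgment about whether the variants really behave alike.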

Use Bayesian and sequential testing

Classical frequentist tests need large samples to detect modest lifts. Bayesian methods are friendlier to small-sample situations because they incorporate prior knowledge and produce probabilistic estimates of lift rather than strict p-values. I use simple Bayesian uplift models to answer questions like “what is the probability this campaign produces >10% lift?” Sequential testing lets me update beliefs as data accrues and stop early once the result is convincingly positive or negative.
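A minimal sketch of a Beta-Binomial uplift model that answers “what is the probability of >10% lift?” by Monte Carlo. It assumes each arm's conversion rate has a Beta prior (uniform `Beta(1, 1)` by default); prior knowledge enters through `prior_a` and `prior_b`, e.g. `prior_a=2, prior_b=98` encodes a belief centred on a 2% rate worth about 100 visitors. The function name, parameters, and counts are hypothetical.

```python
import random

def prob_lift_exceeds(conv_t, n_t, conv_c, n_c, min_lift=0.10,
                      prior_a=1.0, prior_b=1.0, draws=20_000, seed=7):
    """Estimate P(rate_test / rate_control - 1 > min_lift).

    Each arm's posterior is Beta(prior_a + conversions, prior_b + non-conversions);
    we sample both posteriors and count how often the relative lift clears min_lift.
    """
    rng = random.Random(seed)  # fixed seed so reruns are reproducible
    hits = 0
    for _ in range(draws):
        p_t = rng.betavariate(prior_a + conv_t, prior_b + n_t - conv_t)
        p_c = rng.betavariate(prior_a + conv_c, prior_b + n_c - conv_c)
        if p_t / p_c - 1 > min_lift:
            hits += 1
    return hits / draws

# Illustrative counts: exposed users vs. an equally sized holdout.
p = prob_lift_exceeds(conv_t=30, n_t=1000, conv_c=18, n_c=1000, min_lift=0.10)
print(f"P(lift > 10%) = {p:.2f}")
```

For a sequential read, rerun the function as each week's data arrives and stop once the probability crosses thresholds you fix before launch (for example 0.95 or 0.05; these values are illustrative).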

Employ predictive uplift modeling and matching

When experiments are impossible, observational techniques such as predictive uplift modeling and propensity-score matching can approximate incrementality.

These approaches require careful validation. I always back-test them on historical windows where I can create a synthetic experiment to estimate bias.
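A minimal sketch of propensity-score matching: each exposed user is paired with the unexposed user whose score is closest (1:1, with replacement), and pairs farther apart than a caliper are dropped. It assumes the propensity scores already come from a separate model, such as a logistic regression on pre-exposure features, which is not shown. The function name, caliper, and data are hypothetical. Running the same matching on a historical window where nothing changed gives a rough bias estimate: the ATT there should sit near zero.

```python
def matched_att(treated, controls, caliper=0.05):
    """Average treatment effect on the treated via nearest-neighbour matching.

    treated / controls: lists of (propensity_score, outcome) tuples, where
    outcome is 1 for a conversion and 0 otherwise. Returns (att, n_matched).
    """
    if not controls:
        raise ValueError("need at least one control unit")
    pair_diffs = []
    for score, outcome in treated:
        # Closest control by propensity score; controls may be reused.
        best = min(controls, key=lambda c: abs(c[0] - score))
        if abs(best[0] - score) <= caliper:
            pair_diffs.append(outcome - best[1])
    if not pair_diffs:
        raise ValueError("no treated unit found a control within the caliper")
    return sum(pair_diffs) / len(pair_diffs), len(pair_diffs)

# Illustrative (score, converted) pairs for exposed and unexposed users.
treated = [(0.30, 1), (0.42, 0), (0.55, 1), (0.80, 1)]
controls = [(0.28, 0), (0.40, 0), (0.57, 1), (0.95, 0)]
att, n = matched_att(treated, controls, caliper=0.05)
print(f"ATT = {att:.2f} over {n} matched users")
```

The caliper trades bias for sample size: a tighter caliper gives closer matches but discards more users, which hurts quickly at low volume.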

Design ultra-low-cost experiments: sample size & duration guide

For quick planning, here’s a simple table I use to prioritize tests based on available monthly conversions and acceptable relative lift to detect:

| Monthly conversions | Minimum detectable lift (relative) | Recommended approach |
|---|---|---|
| 0–20 | >50% | Proxy metrics, pooling across segments, Bayesian priors |
| 20–50 | 30–50% | Small geo/time holdouts, pooled experiments, sequential testing |
| 50–200 | 15–30% | Platform split tests, creative holdouts, propensity matching |
| 200+ | 10–15% | Standard randomized holdouts, full design experiments |

Note: these are rough heuristics; your variance and the distribution of conversion values will change the real numbers.
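To see how conversion counts drive the detectable lift, here is a back-of-the-envelope calculator. It is a sketch assuming an even split between arms, Poisson-distributed conversions, a two-sided α of 0.05, and 80% power; the function name is hypothetical. Under these textbook assumptions the detectable lifts come out larger than the table's ranges; running tests longer, loosening α or power, using informative priors, or pooling brings them down.

```python
import math
from statistics import NormalDist

def relative_mde(conversions_per_arm, alpha=0.05, power=0.80):
    """Approximate minimum detectable relative lift between two equal-exposure arms.

    Treats conversions as Poisson counts, so the log rate ratio has variance of
    roughly 1/c_test + 1/c_control, approximated here as 2 / conversions_per_arm.
    """
    if conversions_per_arm <= 0:
        raise ValueError("conversions_per_arm must be positive")
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    return math.exp(z * math.sqrt(2 / conversions_per_arm)) - 1

# Conversions per arm over the whole test (e.g. 200 monthly conversions split 50/50
# give about 100 per arm after one month).
for per_arm in (10, 25, 100, 500):
    print(f"{per_arm:>4} conversions per arm -> ~{relative_mde(per_arm):.0%} lift")
```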

Reduce noise through better attribution and data hygiene

Lower noise directly improves incrementality detection, so I focus on attribution and data hygiene.
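A common hygiene step is removing double-counted conversions, for example when the same purchase is reported by both a browser pixel and a server-side integration. A minimal sketch, assuming each event carries an order ID and a sortable timestamp; the field names `order_id`, `ts`, and `source` are hypothetical.

```python
def dedupe_conversions(events):
    """Keep one conversion per order ID, preferring the earliest report.

    events: iterable of dicts with hypothetical keys 'order_id', 'ts' (sortable
    timestamp) and 'source'. Returns the surviving events sorted by time.
    """
    first_seen = {}
    for event in events:
        oid = event["order_id"]
        if oid not in first_seen or event["ts"] < first_seen[oid]["ts"]:
            first_seen[oid] = event
    return sorted(first_seen.values(), key=lambda e: e["ts"])

# Illustrative log: order A1 was reported twice, once by each tracking source.
raw = [
    {"order_id": "A1", "ts": 100, "source": "pixel"},
    {"order_id": "A1", "ts": 102, "source": "server"},
    {"order_id": "B7", "ts": 150, "source": "server"},
]
clean = dedupe_conversions(raw)
print(len(raw), "raw events ->", len(clean), "unique conversions")
```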

Control for external factors and seasonality

Small tests are more vulnerable to confounders. I always run quick checks: was there a promotional email, PR mention, or competitor activity that could explain short-term lifts? If possible, schedule tests during stable periods and exclude known campaign bursts from the test window.
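Excluding known bursts can be as simple as dropping those days before computing any lift. A minimal sketch, assuming daily conversion counts keyed by date and a list of confounding windows taken from your marketing calendar; the helper names, dates, and counts are hypothetical.

```python
from datetime import date, timedelta

def exclude_event_windows(daily, windows):
    """Drop days inside any known confounding window.

    daily: dict of date -> conversions.
    windows: list of inclusive (start, end) date pairs, e.g. an email send or PR mention.
    """
    def blocked(day):
        return any(start <= day <= end for start, end in windows)
    return {day: n for day, n in daily.items() if not blocked(day)}

def mean_daily(daily):
    """Average conversions per remaining day (NaN if nothing is left)."""
    return sum(daily.values()) / len(daily) if daily else float("nan")

# Illustrative week with a promotional email on day 3 and its spill-over on day 4.
start = date(2024, 3, 4)
daily = {start + timedelta(days=i): n for i, n in enumerate([3, 4, 11, 8, 3, 4, 5])}
email = (date(2024, 3, 6), date(2024, 3, 7))
clean = exclude_event_windows(daily, [email])
print(f"raw mean {mean_daily(daily):.1f}/day, cleaned mean {mean_daily(clean):.1f}/day")
```

Apply the same exclusions to both test and control so the comparison stays like-for-like.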

Creative and message-first experiments

Sometimes the biggest gains come from creative changes, not audience tweaks. Creative tests are cheaper: rotate creatives in the same campaign and treat the lowest-performing creative as a pseudo-control. Because impressions are abundant even with low conversions, these experiments can reveal meaningful CTR and downstream lift without expensive holdouts.
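A minimal sketch of the pseudo-control comparison on CTR, assuming you have clicks and impressions per creative from the same campaign; the function name and counts are hypothetical. Because the lowest creative is chosen after the fact, its CTR is partly low by chance, so these lifts lean optimistic; a Bayesian comparison on clicks and impressions can temper that.

```python
def ctr_lift_vs_pseudo_control(creatives):
    """Relative CTR lift of each creative over the lowest-CTR creative.

    creatives: dict of name -> (clicks, impressions). Returns (control_name, lifts).
    """
    ctr = {name: clicks / imps for name, (clicks, imps) in creatives.items()}
    control = min(ctr, key=ctr.get)  # lowest observed CTR becomes the pseudo-control
    if ctr[control] == 0:
        raise ValueError("pseudo-control has zero clicks; relative lift is undefined")
    lifts = {name: rate / ctr[control] - 1 for name, rate in ctr.items() if name != control}
    return control, lifts

# Illustrative counts from three creatives rotated in one campaign.
control, lifts = ctr_lift_vs_pseudo_control(
    {"A": (120, 10_000), "B": (150, 10_000), "C": (90, 9_000)}
)
print(control, {k: f"{v:.0%}" for k, v in lifts.items()})
```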

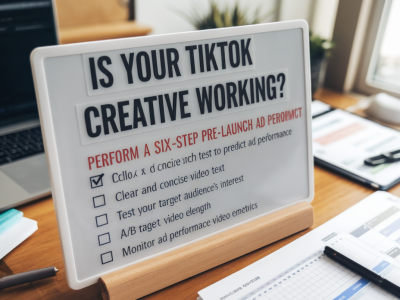

Practical checklist before you launch

I’ve used these approaches to prove incremental value for clients who were convinced testing was impossible. The secret is combining rigorous thinking with practical shortcuts: use higher-frequency proxies, reduce noise, and choose statistical techniques designed for small samples. Done right, low-cost incrementality testing on Facebook and Google becomes less about large budgets and more about smart design and better data.